UMDCTF 2024 Writeups

.;,;.? .;,;..

Key Recovery

Oops, Paul’s private key was in the ornithopter when he crashed it. It’s a bit damaged, but can you recover it?

Attachment: out.txt, encrypt.py, modified.pem

5 solves, 496 points

Challenge modified.pem

-----BEGIN PRIVATE KEY----- MIIEvgIBADANBgkqhkiG9w0BAQEFAASCBKgwggSkAgEAAoIBAQCW5dDBVxDUCBNa Ij3Pb1WotuEevhrG57FA/U1pjRFb7T+uF53FT4PebJgmSVF8jREhWH4dn//7TK tMdIS+zMhZO9aN/Gi3z+kOfDBky7uB+7u7J+6MWsHc/o7EiQ/dG0bHaekXfC fBJaq9KRsKbPyCnqepsO8JC2RRAh2PPlUuLBoCcK8MPz+mw5j+ovD7vgzh ht0m1Kqc4sDlyjiYq6ZG/Y0PqTO4zvBTnDFN4YH+1wUZwEytW1zURG/X mgMvnSomUMuiOpAAlXXXZRFYp1pkEbzhSdUYseCTXkdwlsAT0x2+aT Gt6vgzoJAgMBAAECggEAB7FcqwnYxXtJ6hQjAyj0yX6iGHKho9bn wZjaBfEC7IgSjUHuO3bOBkPgKuHfmq/ZRWOLlOx5L7rcztDpA5 yK4N45/TUzrldBIqGulnU2O2/AHqt8nDEgYdKDrO2xDFJR4N yiLMjkj9ldMC6dRKBrRvZ0xn9E6626W9s0eU+gVhBkKgJQ Nhp36voGmWqzyci+ykQ4qZamuESesY+IaOdUh3fsrI9v 9Hdgq8eqDI27izDzyVLkQ5zIYgAsrVRiiXubUsE2Rw HP3OtUc/NeLFnC7LRQhOxZOxhGV29PZ7IXyxPm4a +4o0pr11U76BHLLs6HnARpwESmPn+CSzT3ybQB zVkABlLRmC518wyXWOTp4XCLjwKBgQC+FTnA 1UUX6ktxlmuuTAuuuWQye5dgIQJPMAqN6Z cTijylwEh6gMxibBtiSND55cC8LGcjRc uutrHn/05wKBgQCa34sqtAcmn1U2K6 03ZLPOB7b74ndmBqbccbkYXljoxe PKAhUEHI2WRxnS4nxB+K7CWuKX iAZccEl7Ns2ZolJJdHvIS0jq QVf8w481PGHUyidYeEghOS EYHhHiSvzFTPNUfV/KJX XYoMIrOjuTPL4R0ldm KtuMdViZR2BcFITn X/D8vi4h5+Xk2/ -----END PRI

We get a wounded RSA key and an encrypted ciphertext. To kick things off we can mask out the parts of the key that were lost and identify what parameters we have left.

Parse key

import base64 with open("modified.pem", "r") as f: modified = f.read() modified = modified.strip().split("\n")[1:-1] modified = [line.ljust(64, "?") for line in modified] modified = "".join(modified) unkchar = "?" pad = "" key_b64 = modified key_bytes = base64.b64decode( key_b64.replace(unkchar, "A") + pad ) key_mask = base64.b64decode( "".join([ "/" if i != unkchar else "A" for i in key_b64 ]) + pad ) bufs = [key_bytes, key_mask] def take(l, desc="?", show=True, full=False, check=None, checkhex=None): if check is None and checkhex is not None: check = bytes.fromhex(checkhex) if check: assert int(check.hex(), 16) & int(bufs[1][:l].hex(), 16) == int(bufs[0][:l].hex(), 16) if show: print(desc) for i in range(2): if l < 30 or full: print(bufs[i][:l].hex()) else: bitcount = bin(int(bufs[i][:l].hex(), 16)).count("1") n_set_bits = [f"{bitcount}/{l*8}"] if i == 1 else [] print(bufs[i][:l][:15].hex(), "...", bufs[i][:l][-15:].hex(), *n_set_bits) print() ret = [] for i in range(2): ret.append(int(bufs[i][:l].hex(), 16)) for i in range(2): bufs[i] = bufs[i][l:] return ret take(4, "struct header", show=False) take(3, "int length 1, version 0", show=False) take(15, "sequence ? keytype", show=False) take(4, "octet stream header", show=False) take(4, "sequence header", show=False) take(3, "int length 1, version 0", show=False) take(4, "int length 0x101 (for n)") n, nmask = take(0x101, "value of n") take(5, "int length 0x03, value of e = 65537", checkhex="0203010001") e = 0x10001 take(4, "int length 0x100", checkhex="02820100") d, dmask = take(256, "value of d") take(3, "int length 0x81", checkhex="028181") p, pmask = take(129, "value of p") take(3, "int length 0x81", checkhex="028181") q, qmask = take(129, "value of q")

int length 0x101 (for n) 02820101 ffffffff value of n 0096e5d0c15710d408135a223dcf6f ... 6930000000000000001adeaf833a09 ffffffffffffffffffffffffffffff ... fff000000000000000ffffffffffff 1876/2056 int length 0x03, value of e = 65537 0203010001 ffffffffff int length 0x100 02820100 ffffffff value of d 07b15cab09d8c57b49ea14230328f4 ... 9cc862002cad5462897b9b52c13647 ffffffffffffffffffffffffffffff ... ffffffffffffffffffffffffffffff 1568/2048 int length 0x81 000000 f00000 value of p 00000000000000000000000000001c ... 52d1982e75f30c9758e4e9e1708b8f 0000000000000000000000000000ff ... ffffffffffffffffffffffffffffff 620/1032 int length 0x81 028181 ffffff value of q 00be1539c000000000000000000000 ... 0000000000000000baeb6b1e7ff4e7 ffffffffff00000000000000000000 ... 0000000000000000ffffffffffffff 492/1032

Unlike previous

wounded key

challenges covered here, this key has

extra header data consistent with an RSA key generated by openssl genrsa as

compared to ssh-keygen and even is missing parts of n.1

Note that the low 56 bits of all are intact. From this we could solve for where by working modulo . can be approximated with where (with high probability) is small enough to brute force.

bits_for_k = 56 mod = 2 ** bits_for_k def M(x): return x % mod lhs = M(d * e) rhs = M((p-1) * (q-1)) found_gk = None for g in range(100): for k in range(1, e): if M(g * lhs) == M(k * rhs + 1): found_gk = (g, k) print(f"Found {g=} {k=}") assert found_gk is not None g, k = found_gk

Found g=10 k=33411

We can use the relations and as constraints for a branch and prune approach to solve for unknown parts of by iteratively solving for these values with increasing modulus. We start from a trivial solution , then using solutions we can enumerate all solutions :

- Begin with some which satisfies the above constraints

- Consider the possible values of

- If the bit in is unknown, either or

- If the bit in is known and set,

- If the bit in is known and not set,

- The same can be said of

- If bits across the four values are unknown, we now have possible solutions

- Remove any solutions which do not satisfy and

- We now have some solutions valid under

We can analyze the low 1024 bits of the masks of to find that at any bit position at most two values are unknown, so at most 4 new solutions are created at each branch.

from collections import Counter masks = [nmask, dmask, pmask, qmask] print(Counter([ ([bool(m & (1 << b)) for m in masks]).count(False) for b in range(1024) ]))

Counter({2: 488, 1: 348, 0: 188})

Since we have two constraints, there is roughly a chance that an invalid solution passes. This means the expected number of solutions after pruning will be at each step and (with high probability) we can efficiently enumerate all solutions up to to reveal .

Branch and prune

from itertools import product from gmpy2 import is_prime params = { "n": (n, nmask), "d": (d, dmask), "p": (p, pmask), "q": (q, qmask) } def get_candidates(name, cur, fiddle): val, mask = params[name] if fiddle & mask: return [cur + (fiddle & val)] else: return [cur, cur + fiddle] endpos = 1024 results = [] def branch_and_prune(pos, solution): if pos == endpos: results.append(solution) print(solution) return fiddle = 2**pos mod = fiddle * 2 candidate_solutions = product( *[get_candidates(name, cur, fiddle) for name, cur in zip("pqnd", solution)] ) for next_solution in candidate_solutions: next_p, next_q, next_n, next_d = next_solution if (next_p * next_q) % mod != next_n: continue if ((next_d * e - 1) * g) % mod != (k * (next_p - 1) * (next_q - 1)) % mod: continue branch_and_prune(pos + 1, next_solution) branch_and_prune(0, (0, 0, 0, 0)) print(f"{len(results)=}") # there's two candidate d but the p/q are the same assert results[0][:2] == results[1][:2] p, q, n, d = results[0] # sanity check primes assert is_prime(p) assert is_prime(q)

(1427102445[...]5029074831, 1334807604[...]8175631591, 1712694523[...]7682291209, 8278934094[...]8118550087) (1427102445[...]5029074831, 1334807604[...]8175631591, 1712694523[...]7682291209, 9816359083[...]0230618695) len(results)=2

Finally, decrypt the flag.

Decrypt

from Crypto.PublicKey import RSA from Crypto.Cipher import PKCS1_OAEP n = p * q phi = (p-1) * (q-1) d = pow(e, -1, phi) key = RSA.construct((n, e, d)) cipher = PKCS1_OAEP.new(key) print(cipher.decrypt(open("out.txt", "rb").read()))

b'UMDCTF{impressive_recovery!_i_forgot_to_tell_you_this_but_the_private_key_ends_with_VATE KEY-----}'

attack of the worm

the fremen are trying to sabotage the spice harvest and they need your help! spice harvesters have worm image recognition technology to know when to avoid a worm attack. luckily for you, a hacker genius got access to the pixels of the worm image, but you can only change up to 30 pixels of it. can you help the fremen destroy a spice harvester?

NOTE: the model is the same as

the worm strikes back

nc challs.umdctf.io 31774Attachment: model.pt, server.py, worm.png

11 solves, 491 points

We get a whitebox implementation for a resnet18 model with a custom dense

classifier layer that outputs how “worm” the image is. We also get a fixed input

image. By changing 30 pixels of the fixed image RGB data we need to make the

image < 0.5 worm.

import numpy as np from PIL import Image import torch import torch.nn as nn from torchvision.models import resnet18 model = resnet18() model.fc = nn.Linear(model.fc.in_features, 1) model.load_state_dict(torch.load('model.pt')) model = model.cuda() original_worm = np.array(Image.open("worm.png")) original_worm_x = torch.tensor(original_worm.transpose(2, 0, 1) / 255.0, dtype=torch.float32).unsqueeze(0).cuda() original_worm_y = float(torch.sigmoid(model(original_worm_x))) print(original_worm_y, original_worm_y < 0.5)

0.5788716077804565 False

Neural networks are trained by feeding it input, calculating the difference (a.k.a “loss”) between the model’s prediction and ground truth answers and using calculus to find out how each parameter in the neural network should change to minimze the loss (a.k.a “gradients”). We can extend this fancy calculus to the input data as well to “train” our input so that it produces a desired output. This process is called adversarial generation and for a more comprehensive introduction I’d recommend this writeup by Zeyu.

The challenge restricts us to editing only 30 pixels of the input image. Once we decide what set of pixels we want to change, we can run the model on the input image, calculate the loss that is minimum when the image is “not worm”, backpropagate it and only apply changes to the selected pixels.

# pipeline(image ndarray) => loss, image gradient def pipeline(inp): x = torch.tensor(inp.transpose(2, 0, 1) / 255.0, dtype=torch.float32).unsqueeze(0).cuda() x.requires_grad = True model.zero_grad() loss = torch.sigmoid(model(x)).sum() (-loss).backward() return float(loss), x.grad[0].cpu().numpy().transpose(1, 2, 0) assert pipeline(original_worm)[0] == original_worm_y # optimize(image ndarray, list of pixels to allow changes, learning rate schedule) # => loss, new image def optimize(img, selected_pixels, lr_schedule): mask = np.zeros((224 * 224, 3)) mask[selected_pixels] = np.ones(3) mask = mask.reshape((224, 224, 3)) img = img.copy() for lr in lr_schedule: y, grad = pipeline(img) masked_grad = grad * mask # only change the selected pixels img = img - masked_grad * lr img = img.clip(0, 255) return y, img

To decide which pixels to adjust, a greedy approach can be used. Randomly sample a pool of “candidate pixels” and for each pixel generate an image allowing changes in this one pixel’s data. The “candidate pixel” with the largest improvement in loss means it has significant infuence on the classifier output and can be added to a pool of “unstable pixels”. More rounds of sampling and generation can be done but now allowing changes to the all previous “unstable pixels” as well as the new “candidate pixel” for each image. We terminate once 30 unstable pixels have been found and this subset of pixels is likely sufficient to generate a image which fools the classifier.

Greedy search and adversarial generation

from random import seed, randrange # guesstimated parameters SEED = 1116 SHORT_LR = np.logspace(4, 3, num=15) LONG_LR = np.logspace(4, 2, num=60) VERY_LONG_LR = np.logspace(4, 2, num=500) TRIALS = 30 not_worm = original_worm.copy() unstable_pixels = [] seed(SEED) while len(unstable_pixels) < 30: candidate_pixels = [] for _ in range(TRIALS): while True: candidate_pixel = randrange(0, 224 * 224) if candidate_pixel not in unstable_pixels: break # optimize a new image using this candidate pixel y, _ = optimize(not_worm, unstable_pixels + [candidate_pixel], SHORT_LR) candidate_pixels.append((y, candidate_pixel)) # pick the candidate pixel with best loss _, next_pixel = min(candidate_pixels) unstable_pixels.append(next_pixel) # optimize the current not_worm image with the current list of unstable pixels y, not_worm = optimize(not_worm, unstable_pixels, LONG_LR) print(f"Step {len(unstable_pixels): >2}: next_pixel = {next_pixel: >5} y = {y:.4f}") # final optimizations with slow learning rate annealing y, not_worm = optimize(not_worm, unstable_pixels, VERY_LONG_LR) print(f"Final y = {y:.4f}") assert y < 0.5 # make sure the image still classifies properly when rounded to u8 not_worm_uint8 = not_worm.astype(np.uint8) y = pipeline(not_worm_uint8)[0] print(f"u8 image y = {y:.4f}") assert y < 0.5

Step 1: next_pixel = 39814 y = 0.5681 Step 2: next_pixel = 31882 y = 0.5622 Step 3: next_pixel = 34264 y = 0.5553 Step 4: next_pixel = 9103 y = 0.5493 [...] Step 21: next_pixel = 2242 y = 0.5007 Step 22: next_pixel = 23979 y = 0.4977 Step 23: next_pixel = 45967 y = 0.4963 Step 24: next_pixel = 6436 y = 0.4964 Step 25: next_pixel = 43330 y = 0.4935 ... Step 29: next_pixel = 10952 y = 0.4878 Step 30: next_pixel = 21210 y = 0.4863 Final y = 0.4850 u8 image y = 0.4851

We can send the final image to the server to claim the flag.

Payload generation and interaction

from pwn import remote from subprocess import check_output changed = np.where((not_worm_uint8 - original_worm)) changed_px = list(sorted(set(zip(changed[0], changed[1])))) assert len(changed_px) <= 30 payload = [] for y, x in changed_px: r, g, b = not_worm_uint8[y, x] packet = f"{x},{y},{r},{g},{b}" payload.append(packet) payload = ";".join(payload) print(payload) # my ml environment's LD_PRELOAD messes with curl :smiling_face_with_tear: !wget https://pwn.red/pow -O pow.sh -q io = remote("challs.umdctf.io", 31774) io.recvline() pow_chal = io.recvline().strip() pow_chal = pow_chal.replace(b"curl -sSfL https://pwn.red/pow", b"cat pow.sh") pow_result = check_output(pow_chal, shell=True) io.send(pow_result) io.sendline(payload.encode()) print(io.recvuntil(b"}").decode())

2,10,255,141,45;77,27,0,0,0;[...];47,205,19,255,27;128,221,201,44,255 solution: Enter a list of pixels to change, in the format 'x,y,r,g,b;x,y,r,g,b;...': LISAN AL GAIB UMDCTF{spice_harvester_destroyed_sunglasses_emoji}

the worm strikes back

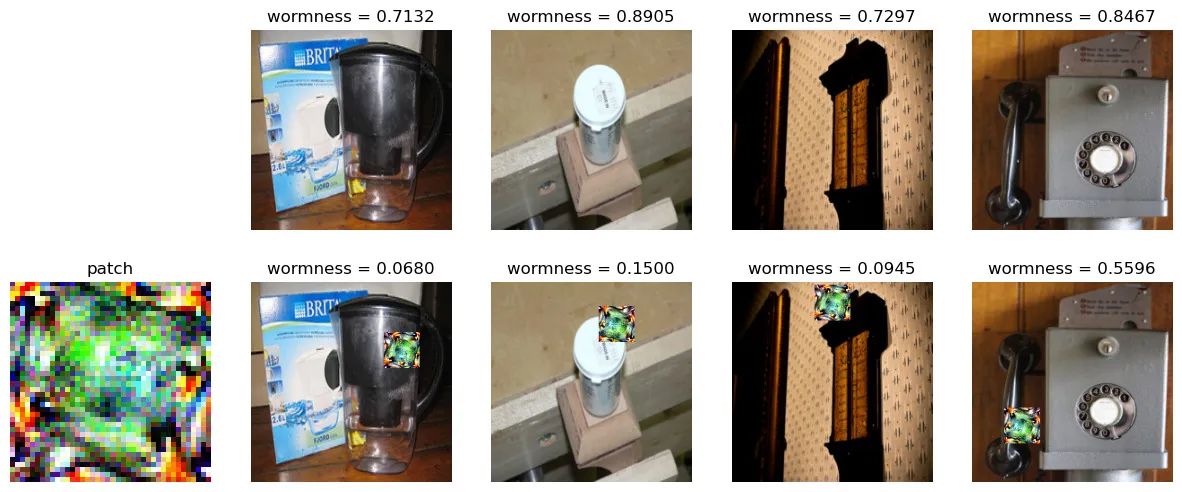

once again, the fremen are trying to sabotage the spice harvest and need you to fool the spice harvester’s worm image recognition! this time though, you need to generate a 40x40 patch to add to the image.

NOTE: the model is the same as

attack of the worm

nc challs.umdctf.io 31775Attachment: model.pt, server.py

1 solve, 499 points

We now need to generate an adversarial patch that is 40 by 40 pixels in size. When applied to a hidden corpus of test images, it should force a prediction of “not worm” on the classifier nerual network.

Several implementations of the famous adversarial patch paper are available for reference but they won’t work out of the box with the challenge, not to mention that the pool of test images is unknown. Though it is not optimal we can use a corpus of imagenet training images to train the patch with and try to re-implement the paper.

Get training data

from glob import glob from random import sample !git clone git@github.com:EliSchwartz/imagenet-sample-images.git BATCHSIZE = 50 impaths = glob("./imagenet-sample-images/*.JPEG") def get_batch(): batch = [] for impath in sample(impaths, k=BATCHSIZE): img = Image.open(impath).convert("RGB") img = T.Resize(size=(224, 224))(img) img = T.ToTensor()(img) batch.append(img) batch = torch.stack(batch) batch = batch.cuda() return batch

According to the paper and the reference implementations I found, the steps needed to make an adversarial patch is to:

- Initialize a patch made of random noise

- Pick an image that does not classify to the desired results

- Pick a random location (transformation) that decides where the patch should be overlayed on top of the input image

- Apply the patch to the image with the selected location

- Calculate model outputs and a loss that is minimum when the desired class is produced

- Backpropagate the loss to the patch and adjust the patch image

- Repeat (4-6) until the image with patch applied is classified to our target

or a maximum number of iterations is reached

- Note that the image from (2) and the transformation from (3) is reused

- Repeat (2-7) with different images and transformations until the patch is effective

I made some adjustments to these steps for my solution:

- For each iteration of (2-7), a batch of 50 images2 instead of a single

image was used for speedup and no exclusion of correctly classified images was

done. The same transformation was applied to all images in the batch. This

also required me to set

model.train(False)when loading the model to disable theresnet18’s normalization layers. - The loss function selected was

relu(model(x) + 0.05)without using any sigmoid function. Images that are “worm”0 < predand “barely not worm”-0.05 < pred < 0will have nonzero loss and propagate changes to the patch. Meanwhile, images that are “confidently not worm”pred < -0.05will have zero loss because of how the ReLU function works and will not propagate changes to the patch.

Solver setup

import numpy as np from PIL import Image import torch import torch.nn as nn from torchvision.models import resnet18 import torchvision.transforms as T model = resnet18() model.fc = nn.Linear(model.fc.in_features, 1) model.load_state_dict(torch.load('model.pt')) model = model.train(False) # !!! disable batchnorm! model = model.cuda() def generate_mask(): rotation_angle = np.random.choice(4) x_location, y_location = np.random.randint(low=0, high=224-40), np.random.randint(low=0, high=224-40) def apply(patch): patch = patch.copy() for i in range(3): patch[i] = np.rot90(patch[i], rotation_angle) applied = np.zeros((3, 224, 224)) applied[:, x_location:x_location+40, y_location:y_location+40] = patch mask = applied.copy() mask[mask != 0] = 1.0 return applied, mask def unapply(applied): patch = applied[:, x_location:x_location+40, y_location:y_location+40] patch = patch.copy() for i in range(3): patch[i] = np.rot90(patch[i], 4 - rotation_angle) return patch return apply, unapply

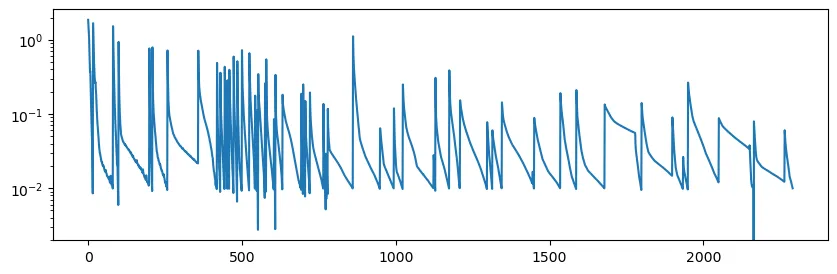

import torch.nn.functional as F from matplotlib import pyplot as plt from IPython.display import display from tqdm.auto import tqdm # guesstimated parameters EPOCHS = 60 MAX_REPS_PER_EPOCH = 100 RELU_EPS = 0.05 EARLY_END_EPS = 0.01 LR_SCHEDULE = np.logspace(1, -1, num=EPOCHS) # logarithmic decay # 1. Initialize a patch made of random noise patch = np.random.rand(3, 40, 40) patch = patch.clip(1/255, 1) # visualiser setup pbar = tqdm(enumerate(LR_SCHEDULE), total=EPOCHS) loss_log = np.zeros([EPOCHS * MAX_REPS_PER_EPOCH]) global_rep = 0 fig, ax = plt.subplots(figsize=(10, 3)) dh = display(fig, display_id=True) for epoch, lr in pbar: # 2. Pick **50 images** regardless of its original output batch = get_batch() # 3. Fix a random location (transformation) that decides where the patch should # be overlayed on top of the input images apply, unapply = generate_mask() for rep in range(MAX_REPS_PER_EPOCH): # 4. Apply the patch to the images at the fixed location applied, mask = apply(patch) applied = torch.from_numpy(applied).float().cuda() mask = torch.from_numpy(mask).float().cuda() applied.requires_grad = True applied_batch = batch * (1 - mask) + (applied * mask) # 5. Calculate model outputs ... preds = model(applied_batch) # ... and a loss that is minimum when the desired class is produced preds_rectified = F.relu(preds + RELU_EPS) loss = preds_rectified.sum() / BATCHSIZE # visualiser loss_log[global_rep] = float(loss) global_rep += 1 if loss < EARLY_END_EPS: # Early stop because loss is low and images in this batch generally # look like "not worm" break # 6. Backpropagate the loss to the patch and adjust the patch image data (-loss).backward() pbar.set_description(f"epoch = {epoch: >2}, rep = {rep: >3} loss = {loss:.4f}") grad = unapply(applied.grad.cpu().numpy()) patch += grad * lr patch = patch.clip(1/255, 1) # visualiser ax.clear() ax.set_yscale('log') ax.plot(loss_log[:global_rep]) dh.update(fig)

Claim flag

import base64 from pwn import remote, context from subprocess import check_output patch_np = (patch.transpose(1, 2, 0) * 255).astype(np.uint8) patch_buf = patch_np.tobytes() patch_b64 = base64.standard_b64encode(patch_buf) # my ml environment's LD_PRELOAD messes with curl :smiling_face_with_tear: !wget https://pwn.red/pow -O pow.sh -q context.log_level = "error" for _ in range(20): # might be unlucky with transformations; try multiple times try: print("Connecting...") io = remote("challs.umdctf.io", 31775) io.recvline() pow_chal = io.recvline().strip() pow_chal = pow_chal.replace(b"curl -sSfL https://pwn.red/pow", b"cat pow.sh") pow_result = check_output(pow_chal, shell=True) io.send(pow_result) io.sendline(patch_b64) print(io.recvuntil(b"}").decode()) break except EOFError: pass

Connecting... Connecting... [...] Connecting... solution: Enter a base64 encoded 40x40 image patch. LISAN AL GAIB UMDCTF{sandworms_love_adversarial_patches}